Animeloop: animation loop recognition

PDF: https://s3.moeoverflow.com/animeloop-production/static/technical_report_en.pdf

Abstract

This article presents a method for identifying loops in anime video. By finding the same frame in the source video to determine the begin and end timepoint of the loop can be fragmented, roughly get the matching loop fragments, and then through some specific filters to filter out the meaningless fragments, and ultimately come to effective loop fragments.

Keywords

Anime, Loop, Video, GIF, OpenCV

Preface

This article presents a method for identifying loops in anime video. By finding the same frames in the source video to determine the beginning and end timepoint of the loop can be fragmented, roughly get the matching loop fragments, and then through some specific filters to filter out the meaningless fragments, and ultimately come to effective loop fragments.

This method is only for the anime video, is due to the special anime production process that the key animator draws the key frame and the program complete the full frames, so the video will have a great chance to find almost exactly the same two frames.

Picture 1: From Nichijou OP1

Target results

loop fragments, that is when playing a video, can't figure out the beginning and end timepoint of the fragment, the entire play can be unlimited. The judgment of the loop fragment is to determine whether a video first frame and the last frame are the same. (Not as long as similar enough, need to be similar to the level of high to almost exactly the same)

But in fact, rely on such a single judgment to identify and clip out of the loop, there will be a lot of meaningless results. The classification of the loop fragments can be roughly different from the perspective of the transition of the scene, the lens following, the change of light and shade, the foreground action:

- Light and dark scenes changes, from the content of the picture out of the black (white) screen or from the black (white) screen into (need to filter out the results)

- Cuts Switch, Jump from one scene to another or multiple times in a short time cut jump (need to filter out the results)

- Motion of the lens, the action of the foreground person (or object) in the case of a unidirectional translation of the background (need to filter out the results)

- Motion of the lens, the background moves back and forth or jitter, the prospect of people (or objects) action (expected results)

- Foreground action - the action of the foreground person (or object) in the case of a background (expected results)

Three Fingerprint Hash Algorithms

Simply image fingerprint hash is a set of binary numbers or strings that calculated according to the distribution of image pixels and a certain hash algorithm. this set of binary numbers or strings is called the image of the fingerprint hash. Fingerprint hash can replace the original picture, for more convenient comparison.

Average hash algorithm

Average hash algorithm, hereinafter referred to as aHash.

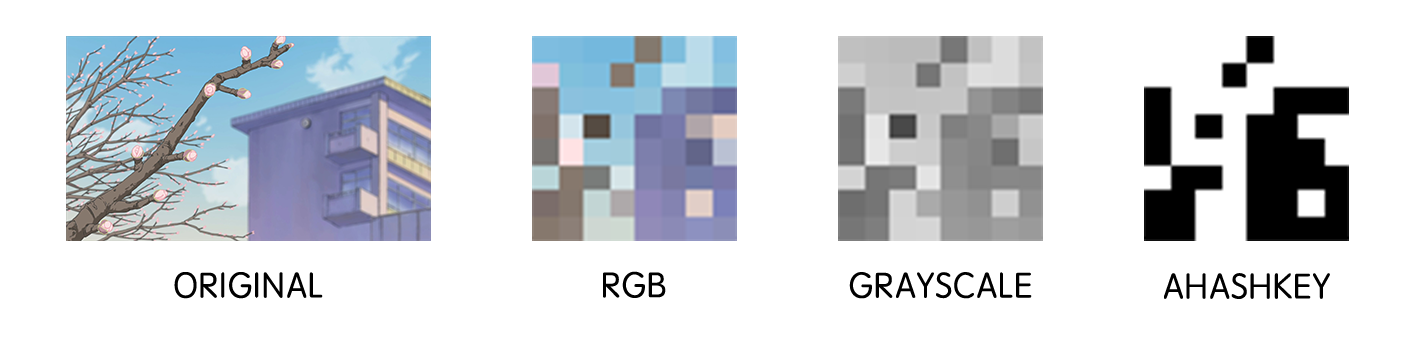

aHash is by comparing each pixel with the average of all pixels in gray scale. the general steps are as follows:

- Resize the picture to suitable size (8x8 in general)

- Convert pictures to 256 levels of grayscale

- Calculate the average of all pixels

- Compare each pixel with the average of all pixels in gray scale, if the pixel is more than the average, then record as 1, and vice versa 0

- The recorded values obtained in the previous step are combined in order to form a certain length of information fingerprint

Picture 2

Perceptual hash algorithm

Perceptual hash algorithm [^ 1], hereinafter referred to as pHash. pHash(s) describe a class of comparable hash functions. Features in the image are used to generate a distinct (but not unique) fingerprint, and these fingerprints are comparable.

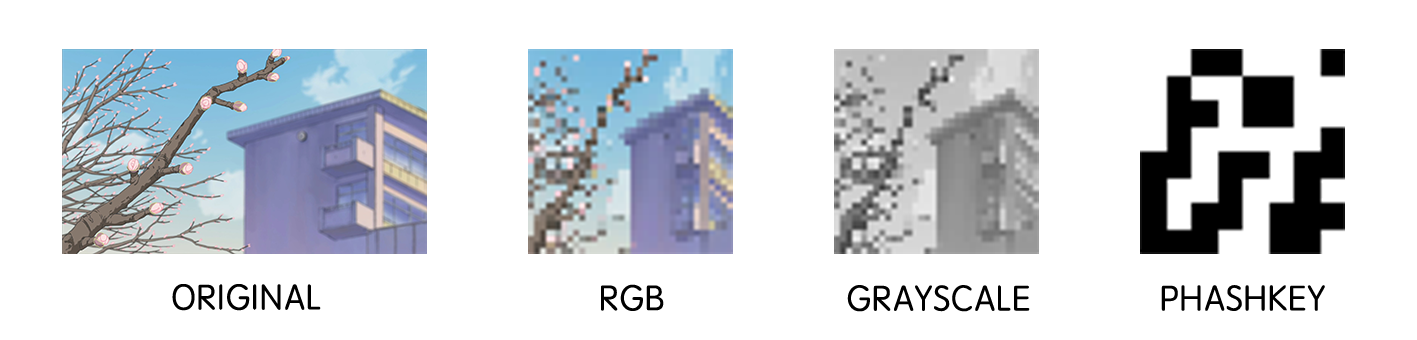

pHash based on each row of pixels before and after the gradient to achieve, the general steps are as follows [^ 1]:

- Resize the picture to suitable size (8x8 or 32x32 in general)

- Convert pictures to 256 levels of grayscale

- Calculate the value of DCT (Discrete Cosine Transform). DCT separates the picture into a collection of frequencies and scalars.

- According to the 32x32 DCT value, the upper left corner 8x8 matrix pixels are flattened into 64-bit fingerprints. A very magical step in comparing each DCT value to the average of all DCT values, or 0 if it is greater than the average.

- Constructs a hash fingerprint based on the 64-bit bitmap. Set the 64-bit bitmap to a 64-bit long integer as a hash fingerprint.

Picture 3

Different hash algorithm

Difference hash algorithm, hereinafter referred to as dHash. DHash is based on the adjacent pixel gradient implementation, the specific steps are as follows:

- Resize the picture to suitable size (typically 8x9)

- Convert pictures to 256 levels of grayscale

- Take each row of pixels, adjacent pixel gray value to do comparison, if the former is greater than the latter, recorded as 1, otherwise 0

- The recorded values obtained in the previous step are combined in order to form a certain length of information fingerprint

Picture 4

The above three algorithms are used to identify similar images, but because of the realization of different principles, the actual effect of the identification of the focus is not the same. aHash is more suitable for similarity recognition of thumbnails, and pHash recognition ability is more accurate, dHash is more suitable for identification of similar image recognition because of its principle adjacent pixel gradient.

The choice of fingerprint hash algorithms

In contrast to the usual practice of identifying similar images, it is necessary to have more accurate identification accuracy in order to find better loop segments. This precision is mainly reflected in the fact that the two pictures before and after the translation of scene or cropping should be judged to be similar but not identical.

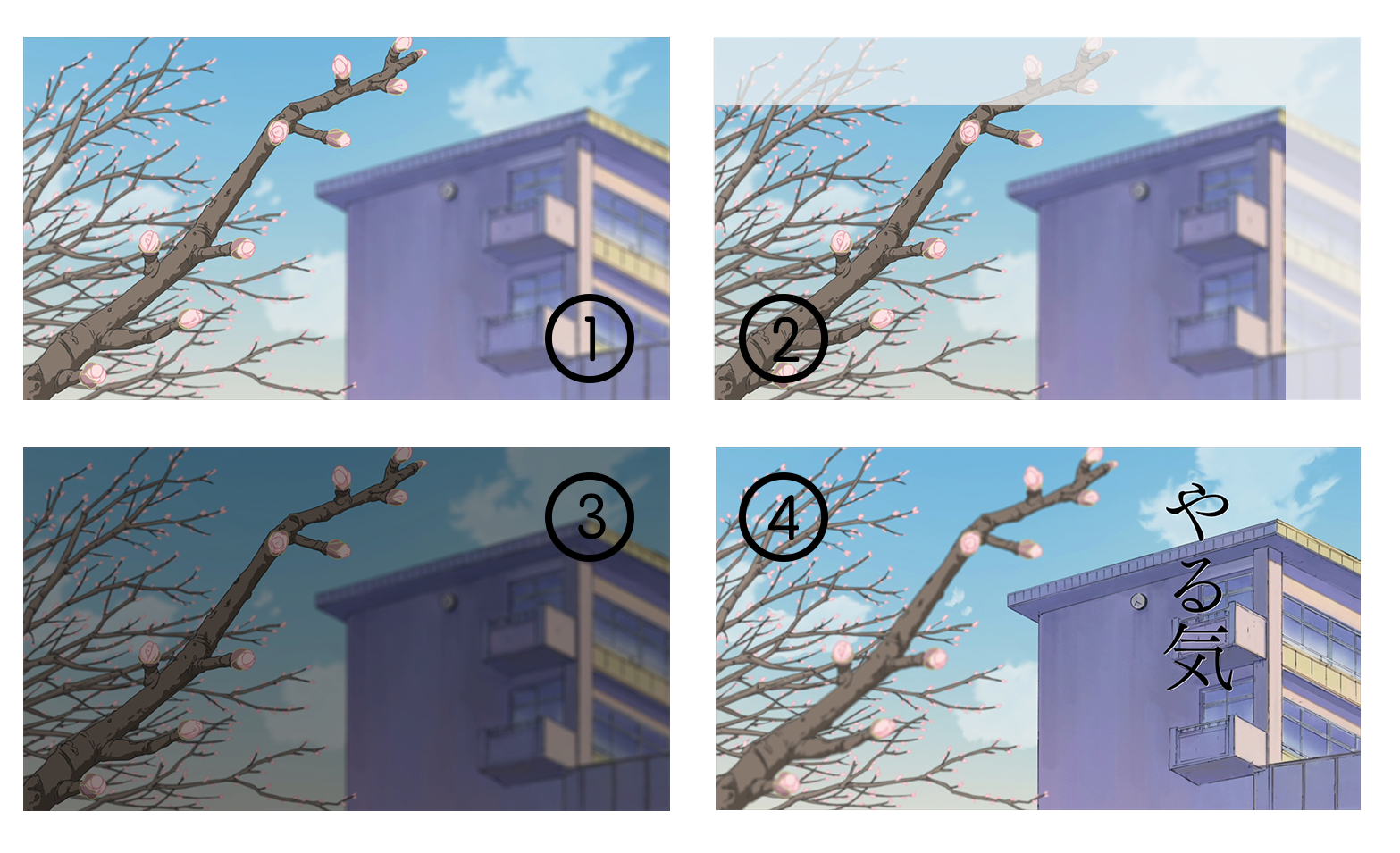

Picture 5: From Nichijou episode 1

P1 is the original; P2 is cut off part; P3 brightness darkens; P4 has text to join. These four pictures can be said to be similar, but can not be said to be the same.

In order to test the similarity of the three hash functions, the aHash, dHash and pHash values of various sizes are calculated, and the similarity between P1 and P2, P3, P4 is calculated. See Table I, Table II, and Table III. Table values represent the similarity between P1 and Px($x \in 2, 3, 4$). The larger the value, the higher the similarity.

| P1 | P2 | P3 | P4 |

|---|---|---|---|

| 8x8 | 0.562500 | 1.000000 | 0.984375 |

| 32x32 | 0.692383 | 0.995117 | 0.944336 |

| 64x64 | 0.676758 | 0.998047 | 0.958740 |

Table I: aHash picture similarity

| P1 | P2 | P3 | P4 |

|---|---|---|---|

| DCT8/8x8 | 0.593750 | 1.000000 | 0.937500 |

| DCT8/32x32 | 0.640625 | 1.000000 | 0.921875 |

| DCT16/32x32 | 0.589844 | 0.992188 | 0.878906 |

| DCT16/64x64 | 0.625000 | 1.000000 | 0.953125 |

Table II: pHash picture similarity

| P1 | P2 | P3 | P4 |

|---|---|---|---|

| 8x8 | 0.609375 | 0.968750 | 0.890625 |

| 32x32 | 0.551758 | 0.924805 | 0.819336 |

| 64x64 | 0.523926 | 0.915527 | 0.806396 |

Table III: dHash picture similarity

We want to be able to enlarge the similarity gap between P1 and P2 in Figure 2 by comparing the fingerprint hash, and narrow the similarity between P1 and P4. It can be seen that the pHash DCT16 / 64x64 is better for differentiating pictures or having different local effects, and can reduce the effect of local variation on similarity judgments, and only the color change can not be distinguished. Therefore, we can use the pHash DCT16 / 64x64 as a method of calculating the fingerprint hash, and when comparing two pictures, calculate the Hamming distance to calculate the similarity, if the similarity is greater than or equal to 0.98 (this threshold is a magic number, For different data can be freely adjusted), you can get two pictures the same. After that we only need to add a filter to filter out the similar images in different color shades.

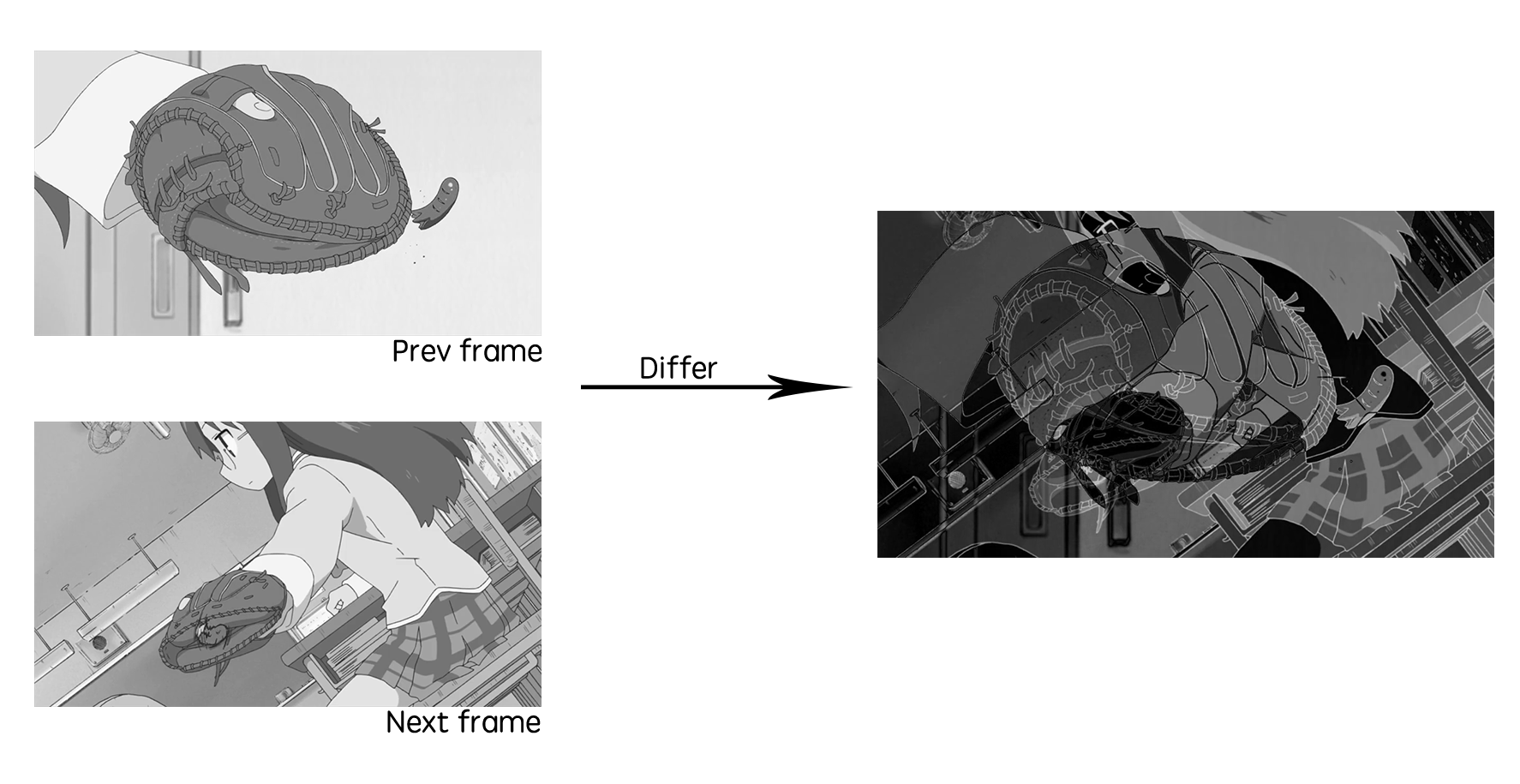

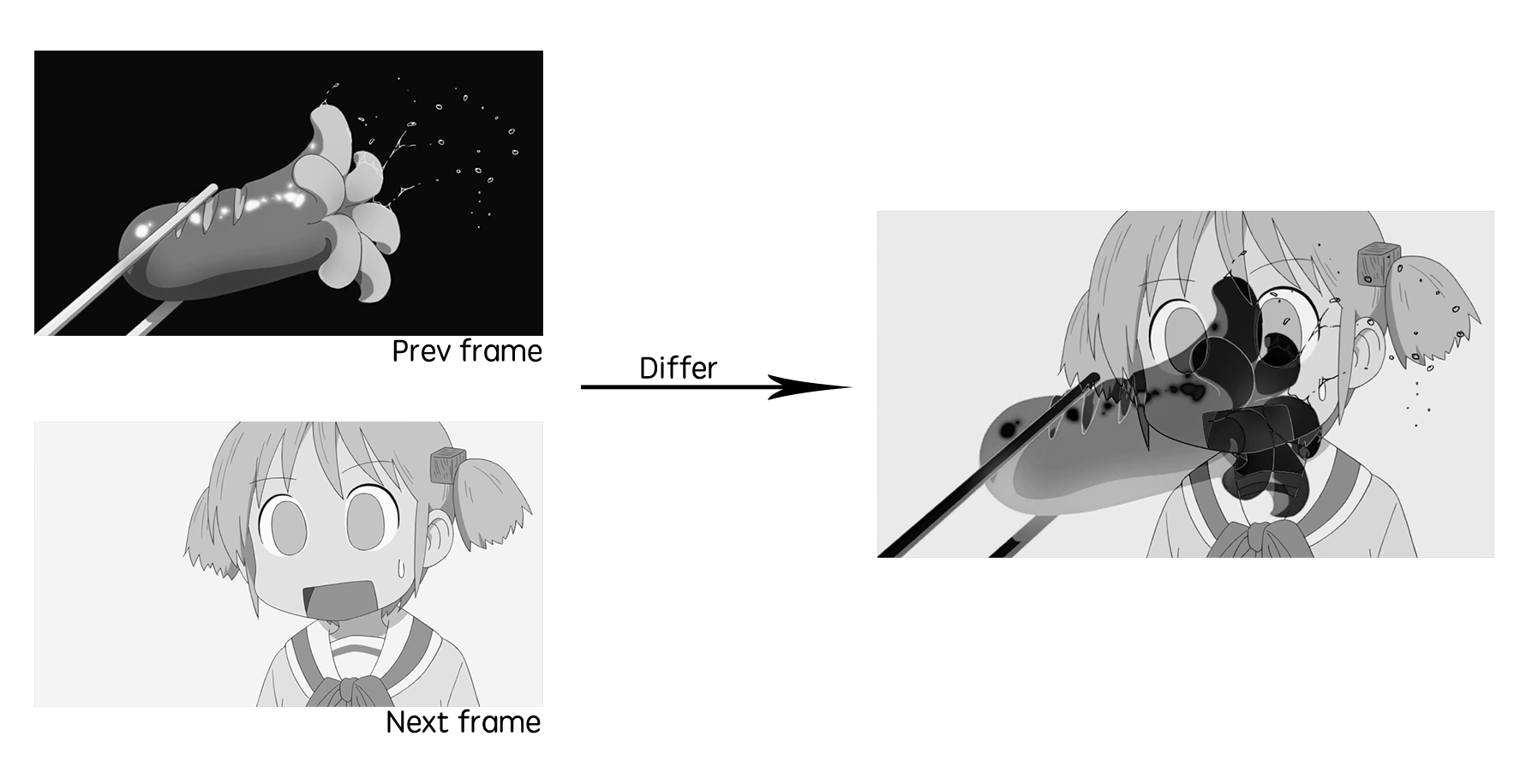

Distinguish the cuts switch

In order to be able to find loop in one cut, you need to first find out the beginning of the end of the time point in every one cut. Here we can use the frame difference method to distinguish the cuts switch - calculate the absolute value of the difference between the adjacent two pixels, the proportion of the higher proportion of non-black pixels accounted for, then the adjacent two pixels changes more, Thereby determining that the lens is switched. See Picture 6 and Picture 7.

Picture 6

Picture 7

The specific steps of the frame difference method are as follows:

- Compress the video to suitable size (typically 64x64 size)

- To iterate through the adjacent two frame images

- Convert pictures to 256 levels of grayscale

- Obtain an absolute difference value for the two images, and obtain a new frame difference image

- Calculate the proportion of non-black pixels in the newly acquired frame difference image, if it is greater than 0.85, then there is a lens switch; otherwise, no lens switch.

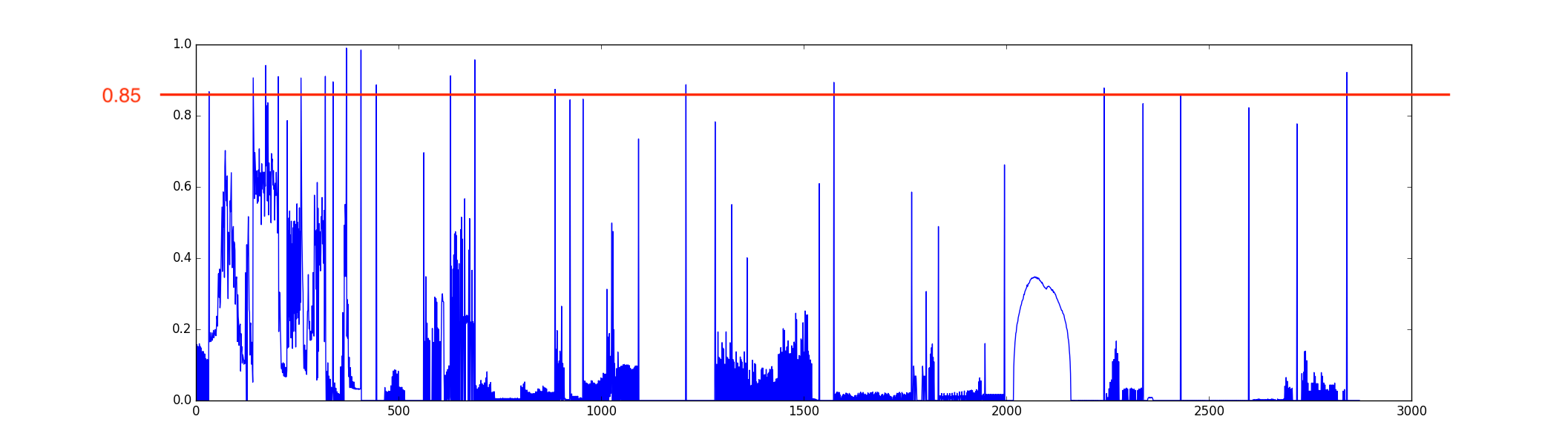

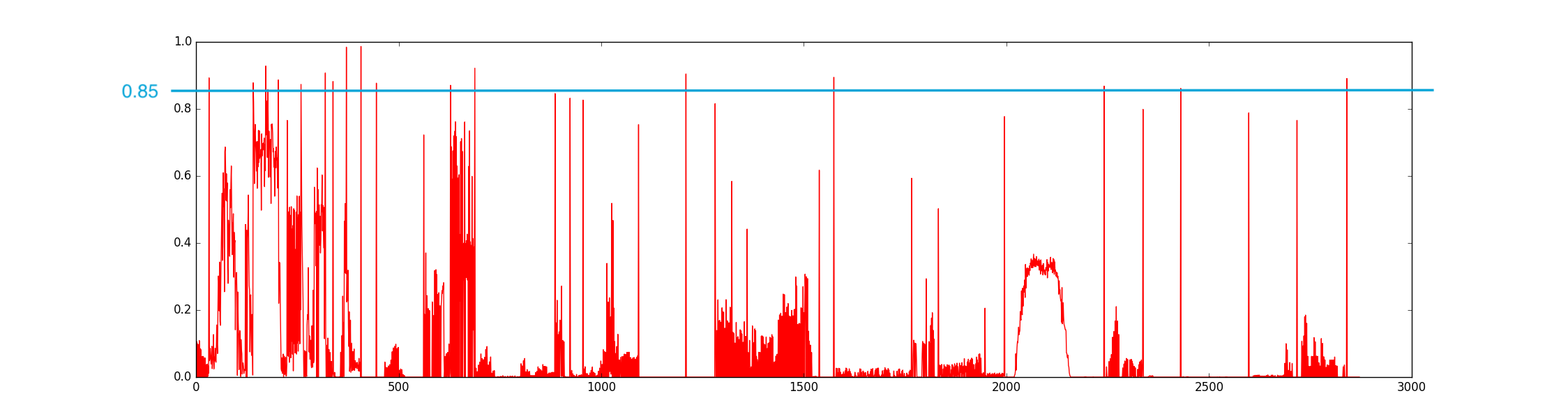

(See Picture 9), we can see that there are some very significant steep additions, we take the ordinate 0.85 (this threshold is also a magic number) Above part of the steep increase in the vertex, combined with video playback eye observation found that these points on behalf of the abscissa is just the time to switch the lens point.

Tips: compressed video size before and after the calculation of the results are basically no difference, after compression, the calculation speed becomes faster, see Picture 8, Picture 9.

Picture 8: the proportion of non-black pixels in Full sieze (1920x1080)

Picture 9: the proportion of non-black pixels in 64x64 size

Identify loop fragments

Cache frequently used data

Before analyzing the identification, first need to use the data cache:

- 64x64 size source video files

- pHash value of each frame of source video

- The timepoints at which the lens is switched

Find the same fragment as the start end frame

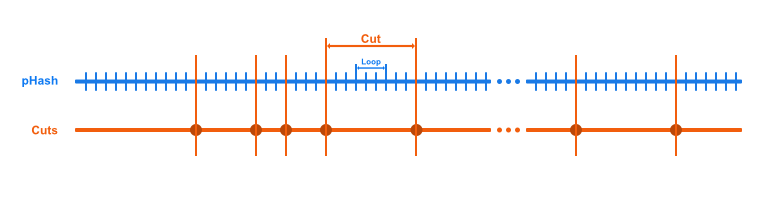

Picture 11

The two horizontal lines represent the sequence of video frames, the blue short bar represents the pHash value of each frame, and the orange long vertical line represents the time point of the lens switching.

It is first necessary to determine the minimum length (kMinDuration) and the longest length (kMaxDuration) of the length of the loop segment to be found. The main method of screening the matching loops is that the double cycle traverses any two frame frames within the limit length to determine whether the same, if identical, that is a circular fragment.

Look specifically at the following pseudo-code:

for i in 0..<(frames-kMinDuration):

for j in kMinDuration..<kMaxDuration:

hash1 = phash(frames[i])

hash2 = phash(frames[i+j])

similar = 1 - hamming_distance(hash1, hash2) / double(64 * 64)

if (similar >= 0.98):

// loop fragment: i ~ i+j

Filter: remove the loop have cuts switch (optional)

To determine whether the loop fragment contains cut switch timepoint? There are one or more cuts switch timepoint, if any, remove the fragment.

Filter: remove fragments with similar content

It is judged whether or not the begining and end frame are similar, and if they are similar, the previous clip should be removed.

Filter: remove loop fragments with small tiny dynamic levels

Calculate the similarity of all frames in two segments, and calculate the variance of the resulting similarity set. This variance value represents the dynamic degree of the slice. Variance is small, indicating that this segment is small, it should be removed.

Filter: remove the beginning of the end of the frame for the black and white solid color of the fragment

Some of the identified loop fragments start from a black or white solid color screen (usually more appearing in the OP, ED), calculate the number of black or white pixels in the end frame of the loop fragment as the total number of pixels, If this proportion is large, then the beginning of this fragment that the screen is white or black, should be removed.

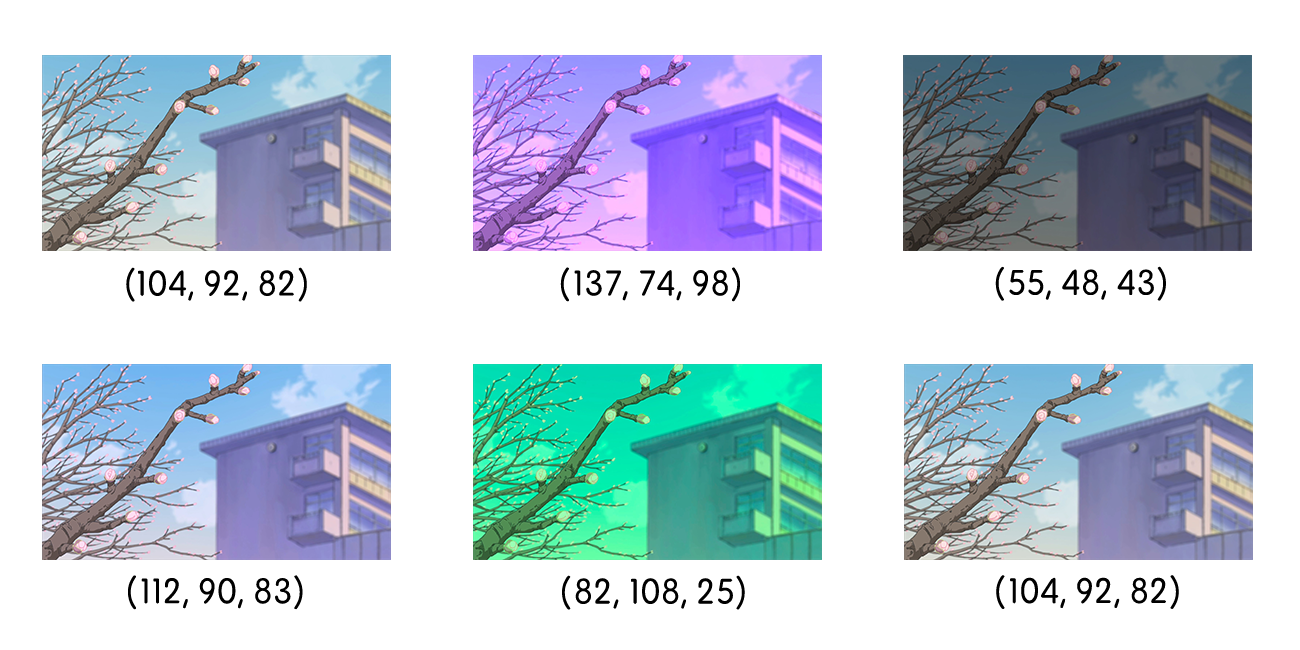

Filter: remove fragments of color inconsistencies

To determine whether the first and last frame picture color is consistent, if not consistent, then exclude the loop fragment. (Mean_g, mean_b, mean_r), if the two pictures to calculate the same average, then the color consistent, see Figure 12.

Picture 12

Implement

File structure

- main

- animeloop-cli

-

- loop_video.cpp/hpp

-

- algorithm.cpp/hpp

-

- filter.cpp/hpp

-

- utils.cpp/hpp

-

- models.cpp/hpp

- cxxopts

- jsoncpp

- research

Source code

visit at Github repo link.

https://github.com/moeoverflow/animeloop-cli

git clone https://github.com/moeoverflow/animeloop-cli.git

cd animeloop-cli

git ckeckout 785c7c89218769f851699a450d37ce0cb1e2668a